Our Data, Our Purposes!

A conversation with Helen Hester

Various feminist positions have treated women as passive victims of technology, understanding the latter as a project of patriarchal domination, without paying enough attention to women’s own agency and their capacity to reappropriate it. In this sense, xenofeminism presents itself as a renewing current insofar as it recognizes the emancipatory power (limited but real) of technology and aims to build concrete strategies suited to our era of accelerated virtualization. What it proposes is a politics of scales and of transits between multiple levels of thinking and action.

Drawing on some of its premises, this conversation will address the way in which the body has acquired new scales of reality and habitability in digital environments. Taking the domestic sphere as a starting point, we will try to think of technical infrastructures as the home of our data bodies. Just as the home has been the locus of a social reproduction that perpetuates normative values, technical infrastructures in turn generate algorithmic normativities that perpetuate the established social order.

The construction and design of a space (be it domestic or digital) shape the limits and possibilities of a body’s capacity to move through and inhabit it. How can we promote the autonomy of our “data bodies” in relation to the technical spaces they inhabit? To what extent can we reimagine the Internet infrastructure to reappropriate it as a common good and resist its verticality and privatization? How can we generate network alliances that forge meaningful links? Is it possible to find a balanced relationship between the different scales of corporeality and habitability that range from the body to the cloud?

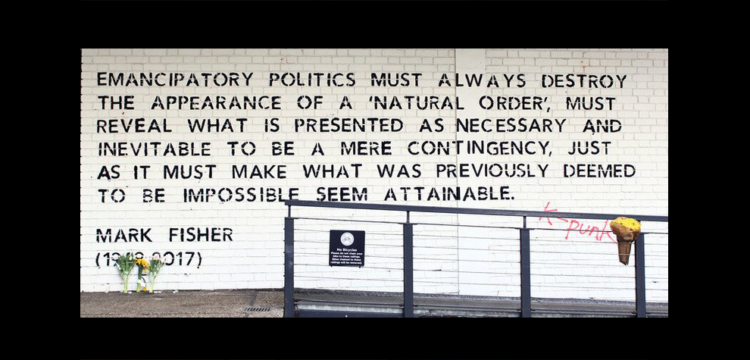

Building on Dolores Hayden’s research on the history of domestic innovations (The Grand Domestic Revolution) and Mark Fisher’s idea of “capitalist realism”, you describe “domestic realism” as “the unwillingness or inability to re-imagine the spaces of social reproduction.” (…) “Domestic realism names the phenomenon by which the isolated and individualized small dwelling (and the concomitant privatization of household labor) becomes so accepted and commonplace that it is nearly impossible to imagine life being organized in any other way.” However, you argue that the domestic space can also be a place from which to launch emancipatory projects, since this sphere can be reconfigured both in terms of its spatial distribution and in terms of the relational dynamics it establishes.

If we draw an analogy between the house as the place where bodies live and digital platforms (social media, Google or Amazon services) as the place where our “data body” lives, we can observe that the design and management of the spaces where our online activity takes place have been privatized. And, given the vast power held by the technological oligopoly compared to the limited power that public institutions have in this area, we can also observe a certain “cloud realism”: a tendency to believe that it is not possible to change or reconfigure these spaces or to build new ones.

How could your ideas on domestic realism help us to re-imagine the digital spaces we inhabit? Do you think that the State, apart from regulating the processing and use of data by these companies, should also create a technical infrastructure which would give rise to more collectivised ways of inhabiting virtual spaces? Or should we favour the collective generation of autonomous and community-based technical infrastructures?

It’s interesting that you make the link between domestic spaces and computational technologies – the computer is, amongst other things, a domestic technology. We have a tendency to concentrate on the cutting edge of developments in things like AI, meaning that we forget that the computer has for some years been a fairly mundane technology of social reproduction (albeit an unevenly distributed one). It is used for a lot of everyday tasks—scheduling, communicating, organizing—processes of what we might call cyborg caregiving. Beyond that, though, it’s increasingly used for the most basic and fundamental activities of daily survival. Whilst we are understandably directing a great deal of critical attention to new innovations and their potential consequences, we are seeing ever-greater lock-in in terms of more established technologies and interfaces—the digital spaces you talk about are increasingly the spaces in which the basic tasks of everyday life take place. It is becoming ever more difficult to access, for example, corporate or governmental help and support in a non-digitally mediated fashion. If one needs to apply for benefits, or make an insurance claim, or query a bank payment, or inquire about a delivery that never arrived, or arrange a doctor’s appointment, or schedule the installation or repair of a phone line, or correct an error in a utility bill… increasingly, in the wealthier nations, these processes demand the navigation of online systems. These are often experienced as obstructive technologies—blockages in terms of reaching the support that we need and that they are ostensibly intended to help us access.

It’s not just that the “user”—the human being with a need to be met—is required to engage with digital interfaces and networks in order to access assistance. The flip side of this is that decisions about the provision of assistance are also increasingly being made and communicated by online systems—the case of algorithmic decision making regarding eligibility for state benefits being one example. As Precarity Lab point out, “Transferring authority to make decisions about public assistance eligibility from people to machines makes negotiating with those accountable for making these determinations seem impossible. The person abandoned by the automated welfare state confronts a new object, the clunky state interface, and is consequently no longer a subject precisely because they have been barred from predication, from conversation and negotiation.” It’s little wonder people have a sense of “cloud realism” in these circumstances! Automated decision making is experienced as fundamentally disempowering.

We see something similar going on in the case of algorithmic management and black-box labour management systems—again, knowledge and the means of challenging the way things are organized are technologically mediated in a new way, making the means of resistance feel less accessible. In the US, there are allegations that some algorithmic management systems are even targeting workers’ self-organization, with elements of anti-union surveillance built in. As Callum Cant notes in his PhD work on the topic, “The augmentation of human supervision by information technology allows for both increases in the intensity of the supervisory regime and the complexity of the production process.” This isn’t a completely new element of management, of course—the idea that representatives of either capital or the state are amenable to reason and available to be negotiated with is perhaps in itself a comforting fantasy—but it does represent a development (and arguably an exacerbation) of existing tendencies. It is a new frontier for systems of control, and further constrains the possibilities for resistance to and refusal of work. That is to say, it demands new tactics.

And, encouragingly, we are seeing this beginning to happen when it comes to algorithmic management—new techniques of worker resistance are emerging, both organized and disorganized. Cant’s research ultimately identifies “a structural capacity for novel approaches to self-organization” emerging in response to contemporary regimes of control, including in the case of platform workers. Informal work groups are organizing via digital interfaces, as well as being organized by them—many-to-many communication networks can be seen to facilitate solidarity on a new scale, and have already proved particularly helpful in fostering the emergence of solidarity across a geographically dispersed workforce. (This is a key point, as experiences of working from home and furlough during the pandemic further the spatial separation of workers with shared interests; there are vital lessons to be learned here for people in other types of jobs, and perhaps further opportunities for coordination across sectors.) So, platform laborers have found opportunities to resist algorithmic management—we can identify pockets of worker militancy, strategizing, and real inventiveness. This suggests a potential orientation for platform users as well, perhaps – an orientation in opposition to “cloud realism” in which the future and all its techno-social conditions are still up for grabs.

What I’ve touched on here is the idea of the internet as infrastructure – when one thinks of it like that, the need for mechanisms to ensure access and accountability seems quite clear. Under current social, technical, and political conditions such mechanisms are, I think, most likely to emerge from and via the state. I’m not talking about policing specific online content here; I’m thinking more generally about basic provision and about the process of decommodification—taking the internet, as a technology that we currently pay for, and reframing it as a social entitlement or public service (with all the potential benefits and pitfalls that implies).

This is not to downplay the value of fostering autonomous infrastructures, by any means—I think empowering as many people as possible to seize the means of digital production is an admirable goal. Being able to engineer alternatives that are nimble and responsive to the needs of particular collectives and communities is a huge deal. But as a goal, it favors those who are already invested in a hands-on approach to changing and intervening within the digital landscape—those who have the skills to look under the hood of a particular technical system, or who have the means at hand to learn and develop these skills. Not everybody is in a position to do this – not everybody has the time or the resources. As I put it in my recent book, “The ultimate aim of a xenofeminist politics of technology should be to transform political systems and disciplinary structures themselves, so that autonomy does not always have to be craftily, covertly, and repeatedly seized (given that the requirement of such seizure, if imposed upon an unwilling subject, takes the form of a burden rather than an emancipatory exercise of freedom).”

If we think of data as a second body, we can ask ourselves: do our data have reproductive capacity? In a sense, yes: they give rise to the generation and birth of artificial intelligences. Any AI, no matter how rudimentary, needs large amounts of data to be trained. One of the most widespread applications of AI is the smart city; the city constitutes another scale of habitability mediated by digital technologies.

We see similarities between the lack of bodily autonomy and the exclusion from decision-making that you discuss in relation to the women’s health movement and what is happening now in relation to digital technologies. The relationship between those who were in charge of providing health care and those who received it was profoundly unequal and marked by gender; in the same way, the relationship today between those in charge of providing technical solutions for smart cities and the citizens to whom these solutions are addressed is also extremely unequal, marked by exclusion from decision-making in the development of AI.

Do our data have reproductive capacity, and what degree of autonomy do we have with respect to that capacity? As subjects of these data bodies, can we claim a right to “abortion” if we decide that we do not want to contribute to the development of certain types of artificial intelligence? Can we collectively decide what model of city we want built, and determine the purposes for which we give up the reproductive rights of our data?

There are some provocative images and ideas here, but we need to be clear about the different stakes involved. If there is a data body, it is certainly not immaterial (the cloud, despite its ethereal-sounding name, is neither free-floating nor disembodied—it’s tethered to the physical infrastructure that makes it possible, including the bodies of the people who manufacture the technologies on which it runs and who obtain the resources on which that manufacturing depends—the wetware of the worker). However, the data body is differently material, and it would be a mistake to downplay this specificity. We mustn’t be glib about gestation and birth when people (particularly Black and indigenous women) are dying for want of adequate and accessible pre-, peri-, and post-natal health care; when we’re still dealing with the fallout from—and in some cases, the ongoing practice of—involuntary sterilizations (a recent class action lawsuit in Canada indicates that indigenous people were being forced, coerced, and tricked into undergoing sterilization procedures in Saskatchewan as late as 2017); and when people are suffering miscarriages, involuntary infertility, and diseases of the reproductive system (such as uterine cancer) as a result of their exposure to toxic working conditions. These conditions are in fact particularly prevalent in the electronics industry—semiconductor manufacture, for example—and in e-waste disposal. The labour processes that make the existence of our “data bodies” possible are also, under present conditions, decimating the health and the lives of those who perform them—figurative data bodies are being reproduced at the expense of the reproduction of literal, flesh-and-blood bodies.

So, even though both are soaked in blood—frequently, but not exclusively, women’s blood—I’m not sure it’s necessarily productive to bring biological reproduction and data reproduction into conversation in quite this way. It conflates too much, it elides significant details, and it risks unnecessary misunderstanding.

That being said, however, I can see how the idea of bodily autonomy might have been useful to you in the framing of this question. The idea of a right to self-sovereignty, the emphasis on agency and informed consent, collective resistance to both direct and indirect forms of compulsion—all of these are transferable ideas, concepts with a wide sphere of relevance and possible application. Each could feasibly be carried over as a guiding principle for what you’re describing as “data body autonomy”. Consent, for example, is already an idea widely used to discuss what happens to and with our data; when the United States House of Representatives voted in 2017 to repeal rules preventing Internet Service Providers from selling customers’ data without their knowledge and approval, this was generally understood precisely as an issue of consent.

There are assorted (and often imperfect) ways to help protect our data and to practice data self-defense—the use of VPNs being one obvious and increasingly widespread example; the idea of obfuscation (or of generating virtual noise so as to hinder the data collection process and reduce its accuracy) is another. These will likely remain important tools, even if we also concentrate on reimagining and re-engineering more emancipatory technical systems. What your question points to, though, is the idea that there might be cases in which we—as agential political subjects—choose to share our data; cases in which we would like to see the informational by-products of our digitized existence put to productive use in building a better world. This implies a more targeted approach to data collection—not simply hoovering up any and all information for the sake of it, but thinking instead about what we need our data to do—how it can be put to use for us, on our behalf, for the sake of people not profit. This might also involve trying to pre-empt some of the ways in which data sets could feasibly be misused or appropriated—teasing out in advance not only the positive applications, but also the possible problems that gathering and having access to this data might provoke, so that we can frankly reflect on this and collectively weigh the implications to the best of our current abilities. People should be informed about what kinds of data they are generating and of how this is being put to use, and should have both a stake and a say in the process—whether that’s via individualized opt-in/opt-out mechanisms embedded in the interface, or via periodic referenda on the general terms of collective data usage, for example.

We might choose to contribute our data to ensure the smooth running of cities on a quotidian, operational basis—providing anonymized information to help ensure the equitable distribution and appropriate targeting of resources for sanitation, child care, housing and so on. We might decide to opt in to anonymized data sharing regarding journeys on public transport networks, for example, the better to allow for the targeting of resources and the development of policy. (If people from less affluent areas of a city more regularly commute via two or more kinds of public transport, let’s say—tram, bus, public bicycle, or whatever—then perhaps fares could be redesigned in a more integrated way so as to avoid penalizing or overcharging them.) This isn’t flashy, but it is potentially quite helpful.

At the level of the city, I would argue that data should not simply be shared by residents—but should, wherever possible and appropriate, be shared with them, in the name of creating a kind of data commons. This could serve as a collective resource for the development of the kinds of community-based technical infrastructures you mentioned earlier, giving people access to information that might enable the emergence of autonomous emancipatory projects. The role of the municipality—as, if you like, a scale of administration—might be to use the possibilities afforded to it by its reach and position to help facilitate the emergence of a progressive data culture. This is not simply in terms of gathering information (enforcing principles of consent, privacy, and protection from harm, for example), but also in terms of archiving, preserving, managing, and securing it. The city could be responsible for rendering data maximally accessible for public use, ensuring:

- That it is visualized in a way that is comprehensible to different groups of people;

- That it is searchable in relatively intuitive ways, without the need for specialist software or training;

- That key findings are translated into clear and legible prose.

If we were to use your analogy of reproductive rights for the data body, one could describe this as moving from Our Bodies, Ourselves to Our Data, Our Purposes!

Xenofeminism is characterised by a positive (though not naive) view of the possibilities inherent in any technology and of the capacity to redirect it towards more just ends. In the case of surveillance technologies, widely criticized by authors such as Shoshana Zuboff, it is also possible to imagine a use of big data that focuses not on individual bodies and their behavior (for example, in relation to consumption) but on detecting patterns in economic cycles or identifying changes in geophysical processes, such as those that have made us aware of the scale of anthropogenic climate change, which, as Benjamin Bratton points out, is an epistemic achievement of planetary-scale computation. Big data has also proved useful for public health during the pandemic, with the development of tracking apps. What uses of mass data collection could have positive effects on a planetary scale?

The obvious example here, as you mention, is climate change research. I learned recently that, in terms of the mass use of computing power and data, climate change research is second only to nuclear bomb testing (which demonstrates something of our priorities, and of the variable uses to which our technical systems can be put; it really brings home the political and techno-material stakes involved here). A massive infrastructure has been set up to provide us with an understanding of climate change—one that is necessarily global in its scope. Something like the global average temperature is not information that can be simply or immediately obtained by going to a place and taking a single measurement; it obviously requires a huge amount of data from around the world, data that we obtain via satellites, with ships, using all sorts of things—the many and varied apparatuses of a necessarily global technological process. There are a huge number of factors that need to be taken into account with every measurement if we are to understand how the temperature is increasing globally to the best of our current abilities.

In this context, we might see mass data collection as a tool of what Donna Haraway calls “response-ability”—that is, of the simultaneous obligation and facility to take action—and as the foundation for self-sovereignty, or the quest for heightened collective understanding as an integral part of the democratic project.

The other example you mention comes from the realm of health care. When we talk about the mass collection of medical data, many of us will instinctively (and justifiably) feel quite defensive. It particularly brings home to us the significance of issues around privacy, surveillance, and the control that processes of technologically mediated data gathering enable. Will our data be sold? Is this information personally identifiable, and to whom will this sensitive information be made visible? Are we at risk of locking in a world in which our digital data has a direct effect upon things like our insurance premiums, our ability to access health care, and so on? It is crucial that we continue to ask these questions and that we make every effort to imagine the worst; pre-empting worst case scenarios can be a form of due diligence, and is a really important tool in efforts at harm reduction.

There is another side to this, though. We are already using a huge amount of data for things like diagnosis, research, and development. Our quest to find new drugs and medicines increasingly incorporates artificial intelligence, for example, along with all the data that requires. The current pandemic is a case in point—as you mention, one of the ways in which (some) countries have succeeded in containing the virus is via the implementation of track and trace systems, and finding a means of integrating the various systems in use, or of enabling them to talk to each other when necessary, could generate productive effects. There are valuable applications for mass data gathering here—life-saving applications, in fact—but in order to foster an approach that is genuinely for the global public good, certain conditions need to be in place. The process has to be universally secure, democratic, accountable, and transparent. Imagining the orchestration of political cooperation on this global scale is arguably far more difficult than imagining the technical systems that might be involved. As ever, when dealing with techno-materiality, we find that the technical is as social as society is technical.